Adaptive Exposure from Luminance Histograms

The Struggle of Auto-Adaptive Luminance

If you've ever implemented standard downsample-based auto-adaptive exposure, you know how annoying it is to keep it from going crazy. It works pretty well for 80% of cases, but the rest of the time it's either eating your sunlight or blowing out your shadows. I revisited this pain point recently when I started reimplementing the post processing stack in my DX12 engine, and reached out online to see what kinds of solutions people are using nowadays. I was recommended to check out histogram solutions which, while not 100% perfect, were far closer than their downsample counterpart. Here, I detail a basic luminance histogram implementation, and my hope is that it's something anyone could take and modify to fit their needs.

Why Downsampling Luminance Falls Over

For anyone who hasn't played with it before, the older way of generating the average luminance for your HDR render target is to find the luminance per pixel, and then downsample that luminance until you have a 1x1 final result which contains your average luminance. You then lerp that average frame-to-frame for an adaptive effect. The core issue with simply downsampled luminance comes down to very bright and very dark pixels over-contributing to the final average luminance. These pixels drag the downsample average one way or the other, and it only takes a few of them to completely ruin your luminance-informed tonemapping. It works okay for most situations, and you can clamp the results to a desirable range, but when it falls over, it's noticeable and looks awful.

Luminance Histograms to the Rescue
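To put rough numbers on that failure mode, here is a small CPU-side C++ sketch. The scene is made up (10,000 pixels at luminance 0.2, with a handful replaced by 1000.0), and `arithmeticMean`, `logAverage` and `makeScene` are illustrative names, not part of the shaders in this post. For power-of-two sizes, repeated 2x2 averaging down to 1x1 gives exactly the arithmetic mean, so that is what stands in for the downsample chain here.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Plain arithmetic mean, standing in for a luminance downsample chain.
float arithmeticMean(const std::vector<float>& luminance)
{
    double sum = 0.0;
    for (float l : luminance) sum += l;
    return static_cast<float>(sum / static_cast<double>(luminance.size()));
}

// Average taken in log2 space, then converted back. Assumes all values are > 0.
float logAverage(const std::vector<float>& luminance)
{
    double sum = 0.0;
    for (float l : luminance) sum += std::log2(static_cast<double>(l));
    return static_cast<float>(std::exp2(sum / static_cast<double>(luminance.size())));
}

// Hypothetical scene: `total` pixels at 0.2, with the first `brightCount` set to 1000.0.
std::vector<float> makeScene(std::size_t total, std::size_t brightCount)
{
    std::vector<float> scene(total, 0.2f);
    for (std::size_t i = 0; i < brightCount && i < total; ++i) scene[i] = 1000.0f;
    return scene;
}
```

With 10 bright pixels out of 10,000 (0.1% of the image), the arithmetic mean lands at about 1.2, six times the 0.2 that the rest of the scene sits at. The log2-space average of the same data is about 0.202, which is a hint as to why the histogram in this post is built over log2 luminance.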

Here's where luminance histograms come in. We create a luminance range and divide it into bins, fill the bins by luminance per pixel, and generate a weighted average with the results. Having the samples in bins also lets you more easily weight certain ends of your luminance spectrum to contribute more or less, depending on what you're trying to achieve. That said, let's dive in! Getting to the final result is done in two compute shader passes: one to build the histogram, and one to average it.

Building the Histogram

Let's look at the minimum set of inputs needed to make this work. Of course, we need the HDR input texture (in whatever format you use), and a writable byte address buffer for our luminance histogram. That buffer is created with 256 4-byte (uint32) elements. Because the shader only ever adds to this buffer, it needs to be cleared to zero before the pass runs each frame. We'll need the input texture dimensions, the minimum log2 luminance, and the reciprocal of the total log2 luminance range. I'll go over why I use log2 later on, and some decent values to use for the range to get you started.

    Texture2D HDRTexture : register(t0);
    RWByteAddressBuffer LuminanceHistogram : register(u0);

    cbuffer LuminanceHistogramBuffer : register(b0)
    {
        uint inputWidth;
        uint inputHeight;
        float minLogLuminance;
        float oneOverLogLuminanceRange;
    };

Next we'll need some shared memory to store intermediate thread-group histogram bin counts. NUM_HISTOGRAM_BINS is, as you might've guessed, 256.

    groupshared uint HistogramShared[NUM_HISTOGRAM_BINS];

Finally, the compute shader itself:

    [numthreads(HISTOGRAM_THREADS_PER_DIMENSION, HISTOGRAM_THREADS_PER_DIMENSION, 1)]
    void LuminanceBuildHistogram(uint groupIndex : SV_GroupIndex, uint3 threadId : SV_DispatchThreadID)
    {
        // Each of the 256 threads clears one bin of the group's local histogram.
        HistogramShared[groupIndex] = 0;
        GroupMemoryBarrierWithGroupSync();

        // Skip threads that fall outside the texture.
        if(threadId.x < inputWidth && threadId.y < inputHeight)
        {
            float3 hdrColor = HDRTexture.Load(int3(threadId.xy, 0)).rgb;
            uint binIndex = HDRToHistogramBin(hdrColor);
            InterlockedAdd(HistogramShared[binIndex], 1);
        }

        // Wait for the whole group, then merge its counts into the global histogram.
        GroupMemoryBarrierWithGroupSync();
        LuminanceHistogram.InterlockedAdd(groupIndex * 4, HistogramShared[groupIndex]);
    }

HISTOGRAM_THREADS_PER_DIMENSION is 16, meaning our group will be 16x16 = 256 threads (one thread per histogram bin), and we execute a dispatch size large enough to cover the input texture's size. We start by zeroing the group shared memory and syncing. Next, we sample the HDR target, convert the color to a bin index (more on this below), and increment the bin at that index.

If you're newer to compute shaders, there are a few things here that might catch your eye. The "if" block is so that threads that go past the end of the input dimensions don't contribute to the histogram. This happens when your input dimensions don't perfectly divide by the group size. Next is the InterlockedAdd, which is done so that we don't hit a race condition with two threads within the group incrementing the same bin. Finally, why accumulate to the bins in shared memory instead of the target histogram? This is because accessing shared memory is faster than accessing target resources. After this block, we sync to make sure all our accumulation is done, and then finally add our bin counts to the histogram target. We need to InterlockedAdd here too so that multiple thread groups don't hit race conditions adding to the same bins.
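On the host side, "a dispatch size large enough to cover the input texture" comes down to a ceiling division by the group size. A minimal C++ sketch, where `kHistogramThreadsPerDimension` and `groupCountFor` are names I've made up for illustration:

```cpp
#include <cstdint>

// Must match HISTOGRAM_THREADS_PER_DIMENSION in the shader.
constexpr std::uint32_t kHistogramThreadsPerDimension = 16;

// Ceiling division: how many 16-wide groups are needed to cover `size` pixels.
constexpr std::uint32_t groupCountFor(std::uint32_t size)
{
    return (size + kHistogramThreadsPerDimension - 1) / kHistogramThreadsPerDimension;
}
```

For a 1920x1080 target this gives 120 x 68 groups. 68 groups cover 1088 rows, so the last row of groups has 8 rows of threads past the bottom of the image, and those are the threads the "if" block rejects. The two counts would then go to something like `commandList->Dispatch(groupCountFor(width), groupCountFor(height), 1)`.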

Let's look at how we found that bin index from the HDR color:

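    float GetLuminance(float3 color)
    {
        // Rec. 709 luminance weights.
        return dot(color, float3(0.2126f, 0.7152f, 0.0722f));
    }

    uint HDRToHistogramBin(float3 hdrColor)
    {
        float luminance = GetLuminance(hdrColor);

        // Near-black pixels go to bin 0, which also avoids log2(0).
        if(luminance < EPSILON)
        {
            return 0;
        }

        // Map log2 luminance into [0, 1] across the configured range.
        float logLuminance = saturate((log2(luminance) - minLogLuminance) * oneOverLogLuminanceRange);

        // Spread [0, 1] over bins 1..255.
        return (uint)(logLuminance * 254.0 + 1.0);
    }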

First, we do the same standard luminance dot product that the other luminance solutions use. If it's close enough to 0 we just return 0 to avoid log2-ing 0. We then take the log2 of the luminance and map it to our log luminance range, resulting in a 0.0 - 1.0 value. Lastly we convert that to a bin index range of 1 - 255 (the 0th index is for those close-to-zero values from before).
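If you want to sanity-check the bin mapping outside of a shader, a CPU-side mirror of it is easy to write. This is only a sketch: `luminance709` and `luminanceToHistogramBin` are my own names, and `kEpsilon` is an assumed value, since the shader's EPSILON isn't specified in this post.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Assumed threshold; pick whatever your shader's EPSILON actually is.
constexpr float kEpsilon = 1e-4f;

// Rec. 709 luminance from linear RGB.
float luminance709(float r, float g, float b)
{
    return 0.2126f * r + 0.7152f * g + 0.0722f * b;
}

// Mirrors HDRToHistogramBin: bin 0 for near-black, bins 1..255 for the log2 range.
std::uint32_t luminanceToHistogramBin(float luminance,
                                      float minLogLuminance,
                                      float oneOverLogLuminanceRange)
{
    if (luminance < kEpsilon)
    {
        return 0;
    }

    float t = (std::log2(luminance) - minLogLuminance) * oneOverLogLuminanceRange;
    t = std::min(std::max(t, 0.0f), 1.0f); // equivalent of HLSL saturate()
    return static_cast<std::uint32_t>(t * 254.0f + 1.0f);
}
```

Using the range values given later in this post (minimum of -10.0, range of 12.0), a luminance of 1.0 lands in bin 212, anything at or above 4.0 lands in bin 255, and anything between the epsilon and 2^-10 is clamped into bin 1.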

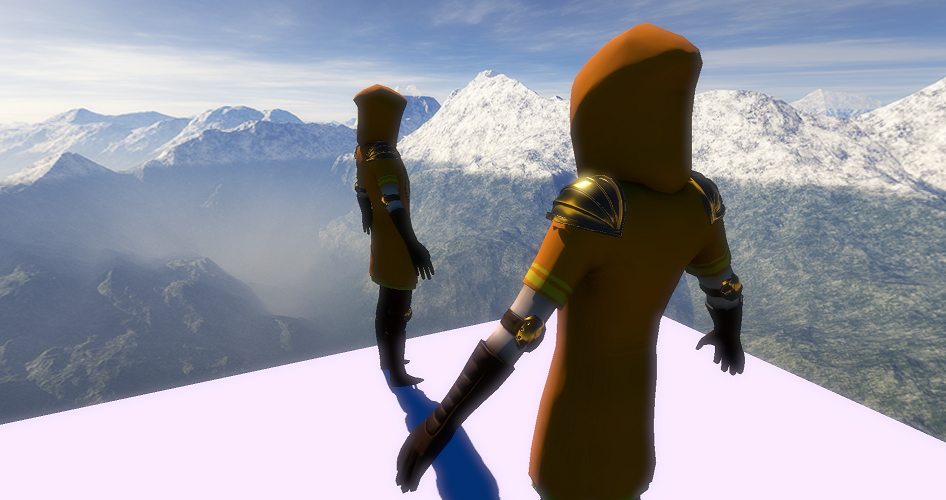

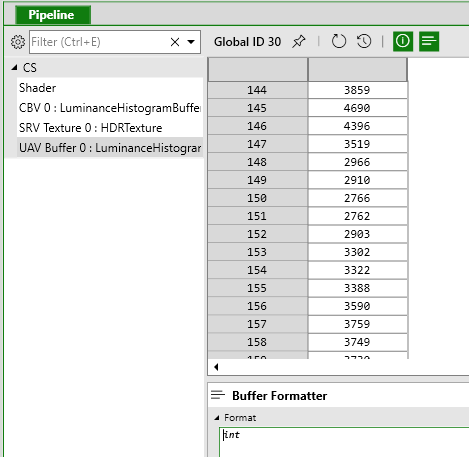

And that's it for the first step, our histogram is built! If we just execute this shader and capture it with PIX, it becomes clearer why I choose log2 for this.

It's really only because it allows for a more even distribution among the bins in my use case, which makes it easier to modify the weights of bins at certain ends of the spectrum, should you find you need to (and you probably will at some point). If you just output straight luminance, you'll find it clumps up a lot more, which makes selective weighting more difficult. Log10 is also fine, I just personally saw better distribution with log2. Your mileage may vary there, so definitely play around with that. There are a number of resources that thoroughly cover why you should probably use log-based luminance rather than standard luminance, so I won't be covering that here. As for minLogLuminance, I use -10.0, and a max of 2.0, making the luminance range 12.0, and oneOverLogLuminanceRange = 1.0 / 12.0.
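To get a feel for what that -10.0 to 2.0 range means per bin, it helps to run the mapping backwards. The helpers below are a hypothetical C++ sketch (the names are mine), going from a bin index back to the luminance at the bin's lower edge:

```cpp
#include <cmath>

// Luminance at the lower edge of a histogram bin. Only meaningful for bins 1..255,
// since bin 0 is reserved for near-black pixels.
float binToLuminance(unsigned bin, float minLogLuminance, float logLuminanceRange)
{
    float t = (static_cast<float>(bin) - 1.0f) / 254.0f;
    return std::exp2(t * logLuminanceRange + minLogLuminance);
}

// Width of a single bin in stops (log2 units).
float stopsPerBin(float logLuminanceRange)
{
    return logLuminanceRange / 254.0f;
}
```

With a range of 12.0, bin 1 starts at 2^-10 (about 0.00098), bin 255 sits at 4.0, and each bin is about 0.047 stops wide, which is roughly a 3.3% step in luminance from one bin to the next. For comparison, if you split the same span linearly into 254 bins, the first bin alone would swallow roughly the darkest 4 stops, which is the clumping mentioned above.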

Averaging the Histogram

Next we just have to find the average of this histogram, which we'll do with another compute shader. Let's begin like before, with the inputs:

    RWByteAddressBuffer LuminanceHistogram : register(u0);
    RWTexture2D<float> LuminanceOutput : register(u1);

    cbuffer LuminanceHistogramAverageBuffer : register(b0)
    {
        uint pixelCount;
        float minLogLuminance;
        float logLuminanceRange;
        float timeDelta;
        float tau;
    };

First we have the histogram from the previous step, and we also have the luminance output target. This target is a 1x1 32-bit float writable texture. Next in the constant buffer is the pixel count of the HDR input texture from the previous pass, the same minimum log value as before, and the range. We also have the timeDelta and tau values for adapting the luminance changes over time. Time delta is your frame-to-frame time delta as you'd expect, and you can use 1.1 as a good starting tau value. This is a very common style of adapting luminance changes and works well here. Again, we'll need some shared memory to read in the histogram:

    groupshared float HistogramShared[NUM_HISTOGRAM_BINS];

And now, the shader:

    [numthreads(HISTOGRAM_AVERAGE_THREADS_PER_DIMENSION, HISTOGRAM_AVERAGE_THREADS_PER_DIMENSION, 1)]
    void LuminanceHistogramAverage(uint groupIndex : SV_GroupIndex)
    {
        // Weight each bin's count by its bin index.
        float countForThisBin = (float)LuminanceHistogram.Load(groupIndex * 4);
        HistogramShared[groupIndex] = countForThisBin * (float)groupIndex;
        GroupMemoryBarrierWithGroupSync();

        // Parallel reduction: halve the active range until the sum lands in HistogramShared[0].
        [unroll]
        for(uint histogramSampleIndex = (NUM_HISTOGRAM_BINS >> 1); histogramSampleIndex > 0; histogramSampleIndex >>= 1)
        {
            if(groupIndex < histogramSampleIndex)
            {
                HistogramShared[groupIndex] += HistogramShared[groupIndex + histogramSampleIndex];
            }
            GroupMemoryBarrierWithGroupSync();
        }

        if(groupIndex == 0)
        {
            // On thread 0, countForThisBin is the count of bin 0 (near-black pixels),
            // which is left out of the average.
            float weightedLogAverage = (HistogramShared[0] / max((float)pixelCount - countForThisBin, 1.0)) - 1.0;

            // Invert the bin mapping from HDRToHistogramBin.
            float weightedAverageLuminance = exp2(((weightedLogAverage / 254.0) * logLuminanceRange) + minLogLuminance);

            // Move last frame's value toward the new average.
            float luminanceLastFrame = LuminanceOutput[uint2(0, 0)];
            float adaptedLuminance = luminanceLastFrame + (weightedAverageLuminance - luminanceLastFrame) * (1 - exp(-timeDelta * tau));
            LuminanceOutput[uint2(0, 0)] = adaptedLuminance;
        }
    }

HISTOGRAM_AVERAGE_THREADS_PER_DIMENSION is 16, so once again we have a group of 16x16, but we only dispatch a single group this time. The first step is to get the weighted value for each histogram bin: every thread reads in its bin's count, multiplies it by the bin index, and stores the result in shared memory. Then we sync the threads, and in the for-loop we do a standard technique used in lots of compute shader reductions in order to gather the summed up histogram value, which will be stored in HistogramShared[0]. Then, on thread 0 only, we get the weighted log average by dividing the total histogram value by the number of pixels, minus the count of the 0th bin. countForThisBin for groupIndex 0 is the count of the 0th bin, and as a starting point, we don't want super-dark pixels to contribute to the histogram average and drag down the average luminance (you may decide you want to, this is just an example). The 1.0 we subtract accounts for the bin range starting at 1 rather than 0. We then reverse the calculation in HDRToHistogramBin to get the weighted average luminance for this frame. We then read in the luminance from the previous frame (currently stored in the target), adapt from that value to the current luminance value, and output it to the luminance texture. And, that's it, we're done!
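A CPU-side reference for this pass can be handy when you want to check what the GPU wrote into the 1x1 target. The sketch below mirrors the shader's math in C++, including the halving reduction so the loop structure is easy to compare. The function names are mine and this is not meant as a drop-in for anything in the engine.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kNumBins = 256; // NUM_HISTOGRAM_BINS

// Weighted average luminance from a 256-bin histogram, mirroring the shader.
float averageLuminanceFromHistogram(const std::array<std::uint32_t, kNumBins>& histogram,
                                    std::uint32_t pixelCount,
                                    float minLogLuminance,
                                    float logLuminanceRange)
{
    // What each thread writes to shared memory: count * bin index.
    std::array<float, kNumBins> shared{};
    for (std::size_t i = 0; i < kNumBins; ++i)
        shared[i] = static_cast<float>(histogram[i]) * static_cast<float>(i);

    // Tree reduction: each step folds the upper half onto the lower half.
    for (std::size_t stride = kNumBins / 2; stride > 0; stride >>= 1)
        for (std::size_t i = 0; i < stride; ++i)
            shared[i] += shared[i + stride];

    // Bin 0 (near-black pixels) is excluded from the pixel count.
    float counted = std::max(static_cast<float>(pixelCount) - static_cast<float>(histogram[0]), 1.0f);
    float weightedLogAverage = shared[0] / counted - 1.0f;

    // Reverse of the bin mapping.
    return std::exp2(weightedLogAverage / 254.0f * logLuminanceRange + minLogLuminance);
}

// Exponential move from last frame's luminance toward the new target.
float adaptLuminance(float lastFrame, float target, float timeDelta, float tau)
{
    return lastFrame + (target - lastFrame) * (1.0f - std::exp(-timeDelta * tau));
}
```

As a quick check with the -10.0 / 12.0 range: if every counted pixel sits in bin 128, the result is exp2(-4) = 0.0625, and that stays the same no matter how many extra pixels you add to bin 0. For the adaptation, each frame shrinks the remaining gap by exp(-timeDelta * tau), so with tau = 1.1 the gap halves roughly every 0.63 seconds regardless of frame rate.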

Next Steps

At this point, we have a working histogram average luminance that we can swap in the place of any existing standard luminance downsample average. The next step to explore in your own tests is to modify the histogram weights to achieve the look you want. This can be as simple or as complex as you want. Personally, I find it useful to create some kind of curve around an expected luminance value, and weight the histogram bins by how close they are to that target. Doing so still allows a useful amount of adapted luminance, but prevents you from straying too far in one direction or the other, and the histogram average as a whole keeps you from varying too wildly.

Performance-wise, this is just a simple example to get started with, so undoubtedly there can be some optimizations here. As it stands, this processes a full 1080p HDR target in ~0.17ms on my 2080. A standard compute luminance reduction on the same card runs in about 0.095ms, so the older solution is still faster. A quick optimization would be to just use a downsampled HDR target to build the histogram (half size or less), since the majority of the cost is in the sheer volume of interlocked histogram accumulation. The trade-off is that your average will shift more than it would otherwise, but that may be acceptable.
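Coming back to the bin-weighting idea, here is one possible shape for it, written as a CPU-side C++ sketch so it's easy to experiment with before moving it into the average shader. The Gaussian falloff, the `targetBin` and `sigmaBins` parameters, and both function names are assumptions of mine for illustration, not the curve I actually ship.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kNumBins = 256; // NUM_HISTOGRAM_BINS

// Hypothetical weighting: Gaussian falloff in bin space around targetBin.
std::array<float, kNumBins> makeBinWeights(float targetBin, float sigmaBins)
{
    std::array<float, kNumBins> weights{};
    weights[0] = 0.0f; // the near-black bin stays excluded
    for (std::size_t i = 1; i < kNumBins; ++i)
    {
        float d = (static_cast<float>(i) - targetBin) / sigmaBins;
        weights[i] = std::exp(-0.5f * d * d);
    }
    return weights;
}

// Weighted mean bin index over bins 1..255: sum(w * count * i) / sum(w * count).
float weightedAverageBin(const std::array<std::uint32_t, kNumBins>& histogram,
                         const std::array<float, kNumBins>& weights)
{
    double numerator = 0.0;
    double denominator = 0.0;
    for (std::size_t i = 1; i < kNumBins; ++i)
    {
        double weightedCount = static_cast<double>(weights[i]) * static_cast<double>(histogram[i]);
        numerator += weightedCount * static_cast<double>(i);
        denominator += weightedCount;
    }
    // With nothing counted, fall back to the lowest regular bin.
    return denominator > 0.0 ? static_cast<float>(numerator / denominator) : 1.0f;
}
```

As an example, take a histogram with half its pixels in bin 100 and half in bin 250. Unweighted, the average bin is 175. With weights centered on bin 100 and a sigma of 40 bins, the bright half is almost entirely discounted and the average stays just above 100. The result is a bin index, so it would go through the same `(bin - 1) / 254` mapping and exp2 as in the average shader to get back to a luminance.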

Hopefully you've found this useful!